I recently started studying machine learning and doing related projects full time. I completed Andrew Ng's Machine Learning course on Coursera and his Deep Learning Specialization, a series of five shorter courses. I have also attended the Fast.ai Deep Learning course in person and taken the Deep Reinforcement Learning Nanodegree from Udacity.

Previously I was a lead software engineer at Fetch Robotics, where I did front-end and back-end web development as well as product management. Before that I was briefly CEO of my own consumer robotics company, where we made furry robot pets. I've also written code for Numenta (machine intelligence research), AnyBots (telepresence robotics), and PBWorks (hosted wikis).

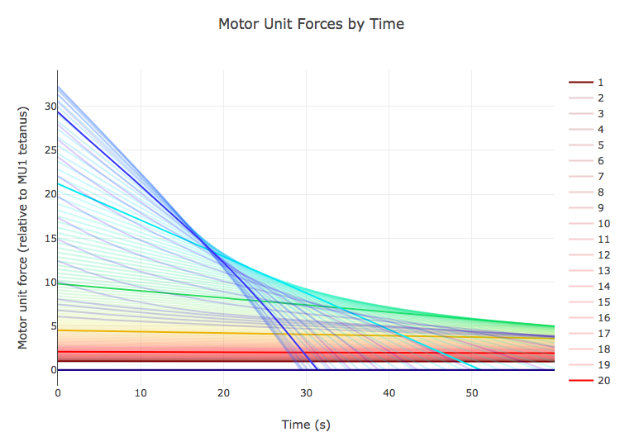

PyMuscle (github) - PyMuscle provides a motor-unit-based model of muscle fatigue for Python. It simulates the complex per-unit relationship between excitatory input and motor-unit output, as well as fatigue over time.

It is compatible with OpenAI Gym environments and is intended to be useful for researchers in the machine learning community.

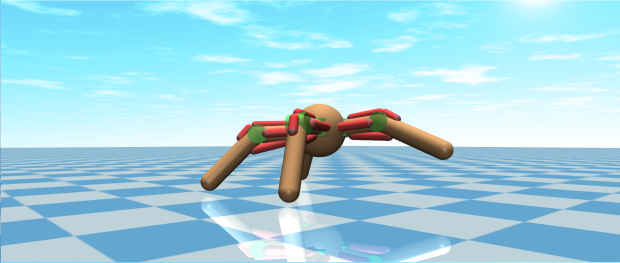

MuscledAgents (github) - MuJoCo environments rigged with muscles (tendons) which use PyMuscle fatigable muscle models.

Each environment replaces the motor actuators in the original model with tendon actuators (soon to be MuJoCo 2.0 muscles) and adds a fatigue-based penalty to the reward function.
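To give a rough sense of what a fatigue-based penalty can look like, here is a minimal sketch. It is not the PyMuscle or MuscledAgents code: `ToyFatigableMuscle`, `penalized_reward`, and every parameter value below are hypothetical, and the single-number fatigue model is far simpler than a motor-unit-based one.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ToyFatigableMuscle:
    """Hypothetical stand-in for a fatigable muscle; not the PyMuscle API."""

    fatigue_rate: float = 0.05   # assumed fatigue gained per step at full excitation
    recovery_rate: float = 0.01  # assumed fatigue lost per step at complete rest
    fatigue: float = 0.0         # 0.0 = fresh, 1.0 = fully fatigued

    def step(self, excitation: float) -> float:
        """Advance one time step and return the force produced, in [0, 1]."""
        excitation = min(max(excitation, 0.0), 1.0)
        # Force is scaled down by the fatigue level at the start of the step.
        force = excitation * (1.0 - self.fatigue)
        # Fatigue builds with effort and recovers in proportion to rest.
        delta = (self.fatigue_rate * excitation
                 - self.recovery_rate * (1.0 - excitation))
        self.fatigue = min(max(self.fatigue + delta, 0.0), 1.0)
        return force


def penalized_reward(task_reward: float,
                     muscles: Sequence[ToyFatigableMuscle],
                     penalty_weight: float = 0.1) -> float:
    """Subtract a penalty proportional to mean fatigue from the task reward."""
    mean_fatigue = sum(m.fatigue for m in muscles) / len(muscles)
    return task_reward - penalty_weight * mean_fatigue
```

In a sketch like this, an agent that drives its muscles at full excitation sees its reward shrink as fatigue accumulates, which nudges it toward more economical movement. The penalty weight is an arbitrary choice here and would need tuning against the scale of the task reward.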

Muscle Control - Controlling muscles is the primary way biological creatures can affect the world. These are dynamic, noisy, inaccurate tools which vary their properties over multiple timescales, and yet biological creatures quickly develop high skill in their control. I work with simulated agents rigged with muscles at various levels of fidelity to explore this task.

Evolved Reward Signals - How do we augment reinforcement learning to overcome the reward shaping problem? Given the Evolutionary Optimal Reward Framework, how do we implement environmental pressures such that agents evolve intrinsic rewards sufficient to bootstrap higher-level task learning?

Label Free Knowledge Preservation - Catastrophic forgetting / knowledge interference is the phenomenon where an agent's performance on previously learned tasks degrades as new tasks are learned. While several methods have successfully preserved knowledge using explicit task labels, how can we extend or modify these methods so that no such label is required?

Fetchcore is the cloud robotics platform for Fetch Robotics. I built the first generation of the backend API and implemented the React-based single-page application web interface. Subsequently I grew and led the team that continued development on the web interface. - Read more ...

Embodied AI, Inc. was a consumer robotics company where I was founder and CEO. Our first product was The Fenn, a furry robot companion. We designed and built a prototype robot and debuted it at Maker Faire, where our "Meet the Fenn" experience was a crowd favorite and winner of a Make Magazine Editor's Choice award. - Read more ...

I am open to hearing about opportunities in machine learning (research and applied) and development supporting the same. While I appreciate the interest, I will politely decline work on core code for self-driving cars or military applications of ML/AI.

email - iandanforth@gmail.com

github - iandanforth

twitter - iandanforth

linkedin - iandanforth